Let’s be honest. We have no idea what is going to happen a year, a week, or even five minutes from right now. And what’s worse, believing we do means we are not only kidding ourselves, but it’s almost certain we will not achieve our desired result.

Rarely do predictions come true. We live in a complex world that frequently and without warning changes around us. It’s a system of constant flux, shape shifting and randomness. Yet still the prevailing approach to deal with this uncertainty is to seek and expect statements of assurance, predictability and guaranteed success. Why? We all know the numbers, facts and figures served up are fiction.

We need to change our mindset.

It’s time to optimize to be wrong, not right.

Invest in information

Imagine, if you will, how a financial trader operates. With $100 to invest and many funds from which to choose, a trader would never bet their entire $100 in one single fund. Instead, they use a system that allows them to explore many possibilities while continually developing options for better investment decisions based on what they discover from exploring each possibility.

The strategy is to limit investment and spread the risk across a number of funds where the potential for a great reward is offset by the potential for a small loss. Instead of investing $100 in one fund, they invest $1 in each of five different funds to find out which is the best investment. It’s a quick, inexpensive way to test the market. From there the trader can pull out of weaker investments with little money lost and increase investments in higher-performing funds. They are paying for information, as information has value.
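The trader’s approach can be sketched in a few lines of Python. This is a minimal illustration, not a trading method: the `Fund` class, the `probe_and_reallocate` function and the return figures are all hypothetical, and it assumes that a small probe stake reveals something useful about how each fund will perform.

```python
from dataclasses import dataclass


@dataclass
class Fund:
    name: str
    observed_return: float  # hypothetical return seen during the probe (0.10 = +10%)


def probe_and_reallocate(funds, budget=100.0, probe_stake=1.0, keep=2):
    """Place a small stake in every fund, then back only the best performers."""
    # Step 1: pay for information with a small, equal stake in each fund.
    probe_results = {f.name: probe_stake * f.observed_return for f in funds}

    # The worst case for the probe round is losing every stake entirely.
    max_probe_loss = probe_stake * len(funds)

    # Step 2: rank the funds by what the probe revealed and drop the weak ones.
    ranked = sorted(funds, key=lambda f: f.observed_return, reverse=True)
    winners = [f for f in ranked[:keep] if f.observed_return > 0]

    # Step 3: split the remaining budget across the surviving options.
    remaining = budget - max_probe_loss
    follow_on = {f.name: remaining / len(winners) for f in winners} if winners else {}
    return probe_results, max_probe_loss, follow_on


# Made-up figures, for illustration only.
funds = [
    Fund("A", 0.10),
    Fund("B", -0.05),
    Fund("C", 0.02),
    Fund("D", -0.20),
    Fund("E", 0.30),
]
probe_results, max_probe_loss, follow_on = probe_and_reallocate(funds)
print(max_probe_loss, follow_on)
```

The point of the sketch is the shape of the decision: the most the probe round can cost is known in advance, and the larger commitment is made only after the information is in.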

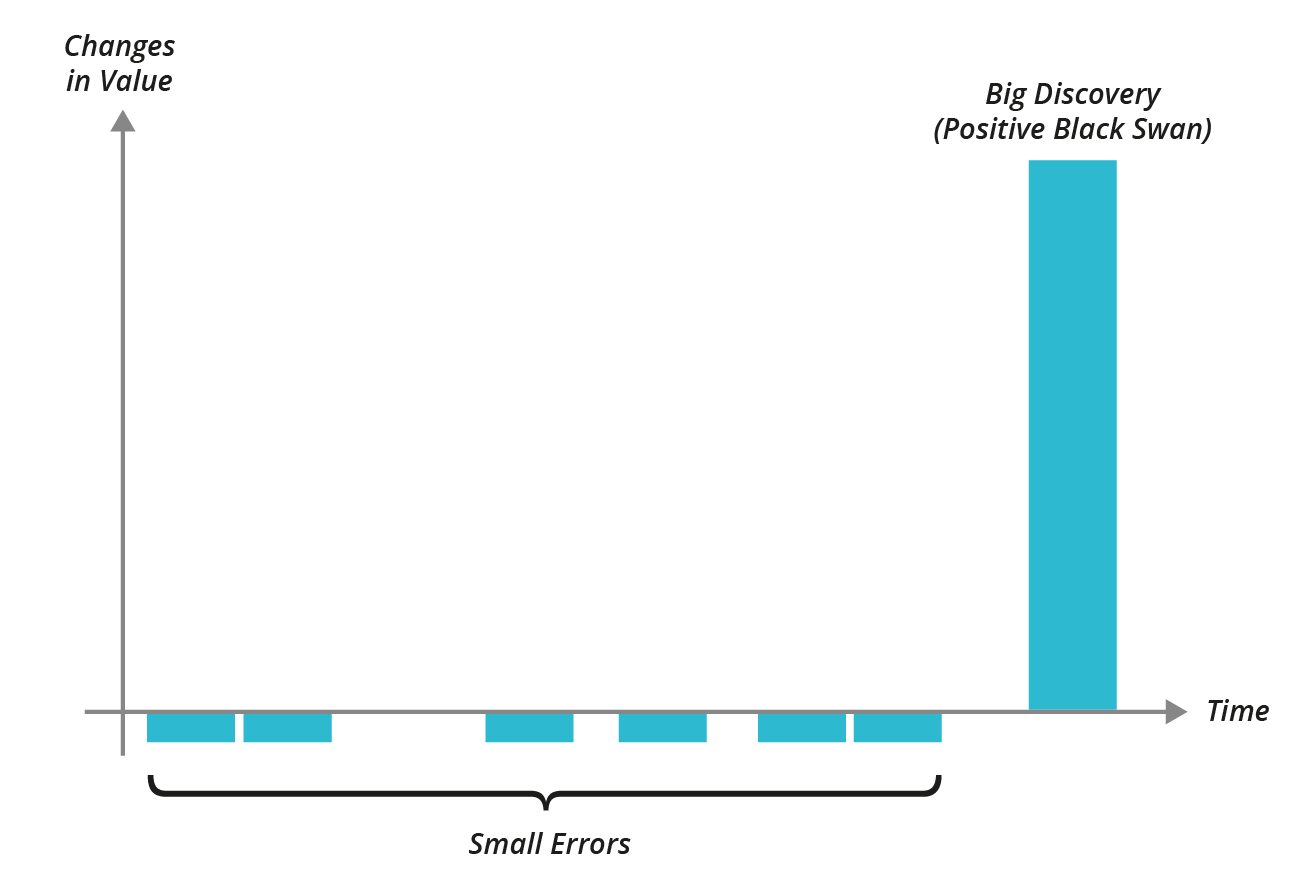

Figure 1: Antifragile by Nassim Nicholas Taleb

This system and strategy embraces the principle of optionality. The majority of options will turn out to be bad options, but the point is that those options are now visible as such. This means they can be abandoned before we commit major financial, time or emotional investment to them. If it becomes clear that an investment is not going in our desired direction, we still gain valuable information from that investment, plus we’ve narrowed our cone of uncertainty.

Accepting that the majority of your ideas and/or methods aren’t going to work out as expected is liberating but, more important, it’s advantageous. Yet, counterproductively, the majority of business organizations, teams and individuals resist the reality of the world, seeking certainty before making a move or, worse, believing that their Industrial-Age governance process of serving up scope, time and budget is a proven approach to predicting success. If anything, they invest more time, money and resources in refining it again and again.

So, why do people behave this way? Why do we frame the certainty of result when we have little evidence to support it aside from experience, case studies and statements of “<insert famous tech company> does it” to back up our claims? Furthermore, why do we continue to use processes that are biased to predict the undeniable success of our idea and seek to validate our hypothesis rather than test to invalidate it?

Most companies and individuals are stuck in an unhelpful rut of scrambling to prove their ideas and methods right rather than prove them wrong, or at least find the weaknesses so they can strengthen them and build something better than what existed at the start.

People tend to forget that the measure of progress for innovation is not how many “good” ideas you validate, but how many you invalidate quickly and inexpensively. Not over-investing in ideas and/or methods with little or no traction frees up investment and time to pursue alternatives based on the valuable information we obtain from invalidated experiments. This optionality is what helps us all make better decisions when dealing with conditions of extreme uncertainty, which is of course inherent in any innovation activity.

Optionality works on negative information, reducing the space of what we do by gaining knowledge of what does not work. For that we need to pay for negative results. The information collected through broad experimentation helps to narrow the cone of uncertainty and inform our next best step, action or newly discovered options. The more options you have, the more you are able to explore different opportunities, meaning you can quickly test a variety of ideas without investing in an entire product launch and committing to it too early.

Counterintuitively, for most organizations the management of unpredictable innovation requires providing certainty of the results. High-performance organizations crave unpredictable results, but certainty in the management of innovation.

A rigid business plan, product roadmap and/or set of requirements forces people to do whatever it takes to prove that the idea or method is a good one. If we are determined to convince others that a certain result is possible, we will lose sight of all of the alternative possibilities and potential options available along the way.

Success doesn’t happen in a straight line, which is why it’s important not to let our business idea tie us down too hard, too early. The market may change, customer needs fluctuate and ideas evolve. If we cling too tightly to an idea or method that doesn’t work, then we’ll go down with it.

When a large amount of effort is required just to get an idea off the ground, it’s easy to become overly invested (in time, money or emotion) in that first idea. Similarly, simply hoping you’ll iterate your way to the answer is its own death march, a time sink that leads to excessive investment. Writing the funding documents, roadmaps, dates, schedules and scope slowly closes your mind to the alternative exit ramps off the highway to hell.

Instead, making multiple small investments to experiment, to dig deeper and find out whether your hypothesis is true or false (or somewhere in between), means that at worst you’ve lost a small amount of time, money and resources but learned a lot. From there, you can synthesize what you have learned and feed it forward into your next round of experiments while continuing to run relatively inexpensive experiments with small risk that cover a broad base of hypotheses. Now you’ve created a recoverable situation, and one with which you can work. Even better, you can scale this method up to match your appetite.

One option is not a real option

So, how could you even start to test ideas quickly, discover information and determine whether your innovation is on the right path? There are many ways of getting there. But one I like is Toyota’s Set-Based Concurrent Engineering, or SBCE for short. Toyota uses SBCE at the concept stage for new-product development when there is extreme uncertainty regarding potential new solutions. Therefore, they plot a path to explore numerous sets of solutions in parallel to test, inform and discount potential options of the final solution. This process is used for everything from new steering wheels to suspension systems for cars.

In SBCE, cross-functional teams design, develop and test sets of conceptual solutions in parallel. As the solutions progress, teams build up understanding, knowledge and evidence about the sets. Each iteration allows the team to gradually narrow these sets by eliminating bad and/or infeasible solutions based on the outcomes of their experiments.

Even as they narrow the sets they are considering, teams commit to staying within them so that others can rely on the sets to continue to remove further uncertainty from the overall problem space. At the end of a cycle, designs are compared, cut and/or progressed with improvements based on learning from the other teams. SBCE preserves optionality by deferring commitment until the last possible moment.

Figure 2: Toyota’s Set-Based Concurrent Engineering

Toyota appears to judge uncertainty based on experience and simple (often unwritten) rules such as, “If there’s only one solution and we have not established what it will cost to produce, then the set is too small.”
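The narrowing of sets and the rule of thumb above can be sketched as follows. This is an illustration under stated assumptions, not Toyota’s actual process: the `Design` class, the `narrow` and `set_too_small` functions, the candidate names, the costs and the pass/fail checks are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Design:
    name: str
    production_cost: Optional[float] = None  # None means the cost is not yet established


def set_too_small(candidates: List[Design]) -> bool:
    """Rule of thumb from the text: one solution left and its cost is unknown."""
    return len(candidates) == 1 and candidates[0].production_cost is None


def narrow(
    candidates: List[Design],
    experiments: List[Callable[[Design], bool]],
) -> Tuple[List[Design], List[List[Design]], bool]:
    """Apply one experiment per cycle to every surviving design."""
    history = [list(candidates)]
    for passes in experiments:
        # Every candidate still in the set is tested in the same cycle.
        candidates = [d for d in candidates if passes(d)]
        history.append(list(candidates))
        if set_too_small(candidates):
            # Too few options: stop narrowing and flag it.
            return candidates, history, True
    return candidates, history, False


# Hypothetical candidates and checks, for illustration only.
designs = [Design("A", 100.0), Design("B", 150.0), Design("C"), Design("D", 90.0)]
failed_durability = {"D"}
experiments = [
    lambda d: d.name not in failed_durability,
    lambda d: d.production_cost is None or d.production_cost <= 120.0,
]
survivors, history, emergency = narrow(designs, experiments)
print([d.name for d in survivors], emergency)
```

Keeping the full `history` of sets reflects the idea that eliminated designs still produced information; the `emergency` flag marks the moment the set has become too small to keep exploring.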

While the Toyota SBCE standard process provides guidance, the chief engineer is empowered to customize the standard to their particular situation. For instance, failures to reduce uncertainty at the proper time or cycle turn into emergencies. Not having enough options to explore is in itself an emergency, with all effort focused on resolving the problem.

When design happens in large batches and/or increments, we can’t explore all the different possibilities and the system may fail. Simultaneous small experiments enable us to experience many small options, outcomes and failures, each of which gives more information about how the design should be modified. In essence, the system becomes more resilient with each failure, which aligns with Nassim Nicholas Taleb’s definition of antifragility.

Embrace opportunities

Optionality is a portfolio of de-risked opportunity, meaning it can be applied to features, products, even portfolios of products for businesses.

The trick is to change our exposure to rare events in a way that we can benefit from them. When uncertainty is high, maximizing your exposure to many possibilities while investing a relatively small amount to test a broad range of hypotheses yields large amounts of information to inform better decisions about what to invest in next, all while limiting risk.

New product solutions emerge by feeding forward the information collected from multiple experiments that did not lead to success. Remember…

Product development is not about developing products. It's about developing knowledge about the product. Great products emerge as a result.

— Barry O'Reilly (@barryoreilly) March 11, 2017

References

Antifragile: Things That Gain from Disorder by Nassim Nicholas Taleb

http://sloanreview.mit.edu/article/toyotas-principles-of-setbased-concurrent-engineering/

http://seedcamp.com/resources/be-nimble-keeping-milestone-optionality/

http://www.slideshare.net/MarkHart2/antifragility-in-new-product-development

https://whatsinnovation.com/2016/06/06/the-time-tested-value-of-optionality/

https://25iq.com/2013/10/13/a-dozen-things-ive-learned-from-nassim-taleb-about-optionalityinvesting/

https://en.wiktionary.org/wiki/optionality